Research Projects

Project Portfolio

My work centers on building trustworthy AI systems that are not only effective but also safe, fair, and respectful of user privacy. I’m especially interested in designing machine‑learning pipelines that are resilient to privacy attacks, robust to adversarial manipulation, and transparent in their decision‑making. This includes work on differential privacy, vertical federated learning, explanation robustness, and bias mitigation in large language models. I approach these challenges with an emphasis on translational impact: bringing theoretical guarantees into practical use, especially in sensitive domains like healthcare and security.

⚠️ Thrust 1 · Adversarial Attacks Leveraging Harmful Content

Objective: This thrust evaluates how adversarial prompts can exploit weaknesses in content moderation and factual reasoning in large language and multimodal models. It systematically analyzes model vulnerabilities and demonstrates the need for robust, context-aware defenses against misinformation.

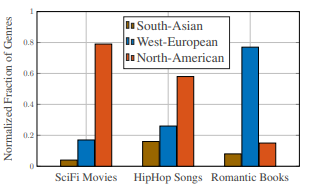

⚖️ Thrust 2 · Fairness & Bias Mitigation in LLM Recommendations

Objective: This thrust investigates how demographic and cultural biases shape the recommendations of large language models across diverse user groups. It quantifies the extent of these biases and demonstrates that context and user attributes can amplify unfairness. It also shows that interventions such as prompt engineering and retrieval-augmented generation can effectively reduce bias and promote more equitable AI outcomes.

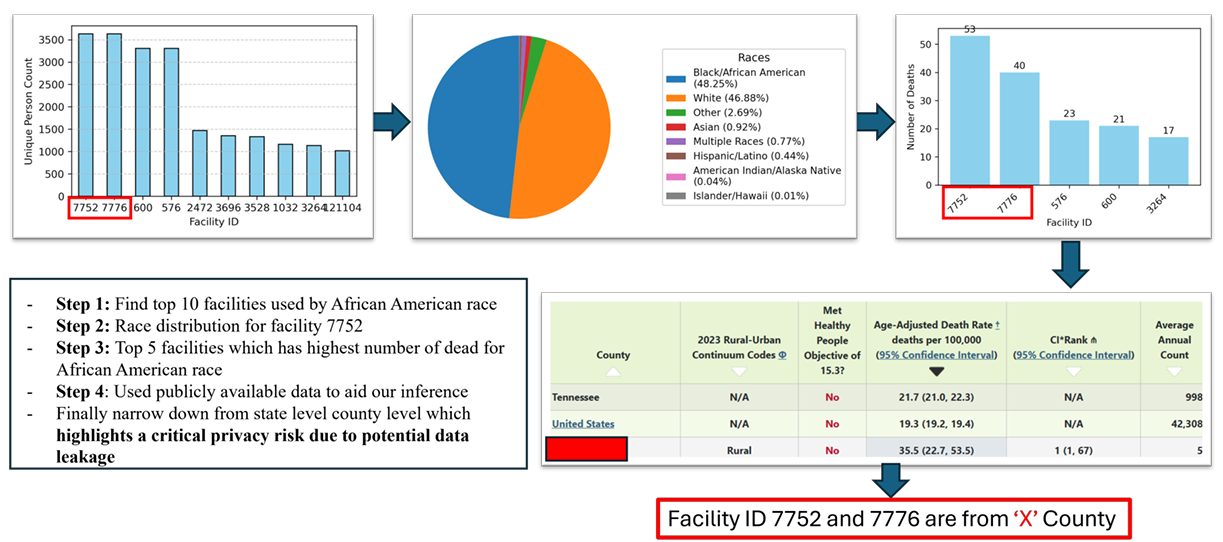

🏥 Thrust 3 · Trustworthy AI for Medical Data: Utility, Privacy, and Hallucination Safety

Objective: This thrust examines how AI systems behave in high-stakes medical environments, where privacy, safety, and clinical reliability must be balanced with model utility. It investigates the tradeoff between utility and privacy in health data workflows, showing how differential privacy, representation learning, and federated approaches can preserve patient confidentiality while retaining diagnostic value. This thrust also explores hallucination risks in medical LLMs by analyzing when and why models fabricate clinically incorrect information and by introducing evaluation frameworks that quantify and mitigate hallucinations in sensitive biomedical contexts.

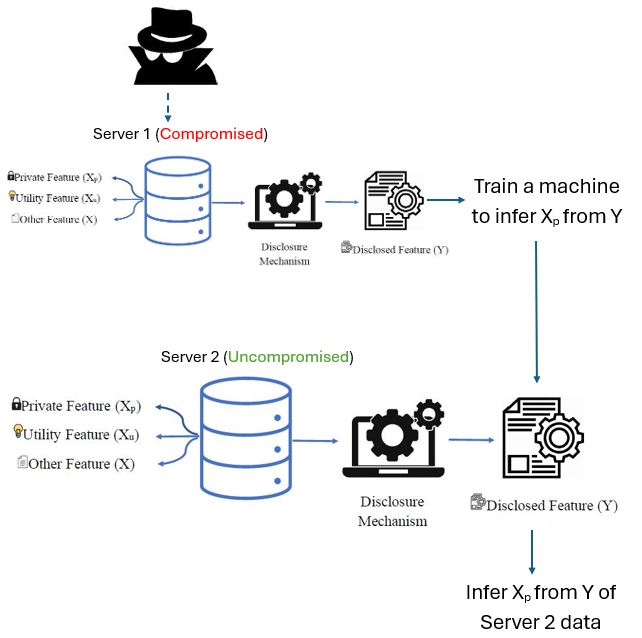

🔒 Thrust 4 · Privacy Leakage Metrics Under Incomplete Information

Objective: This thrust develops realistic information leakage metrics for privacy scenarios where adversaries have imperfect or incomplete knowledge about the data or the privacy mechanism. Unlike traditional approaches, which assume attackers know the entire statistical structure of the system, this work recognizes that real-world adversaries often operate with only partial or estimated information. The thrust introduces new subjective and objective leakage metrics that capture both the beliefs of adversaries and the actual leakage from the system. It then analyzes and optimizes these metrics for different privacy mechanisms, including extensions to complex, high-dimensional, and non-Bayesian settings.

🛡️ Thrust 5 · Scalable Privacy & Security Solutions

Objective: This thrust develops practical, scalable methods to protect sensitive data in AI and networked systems, including privacy-preserving learning, secure data sharing, and robust key management. It emphasizes solutions that balance strong privacy guarantees with real-world usability and performance.

Feel free to contact me if you’d like to discuss collaboration, mentoring, or any of these topics further.